A company’s data centre is central to its business operations. The physical size of the data centre depends on the type and size of the company and can range from a single rack of equipment right through to a stand-alone facility of several hundred thousand square feet.


To keep this article as descriptive as possible, the information below relates mainly to substantial data centres rather than single-rack installations.

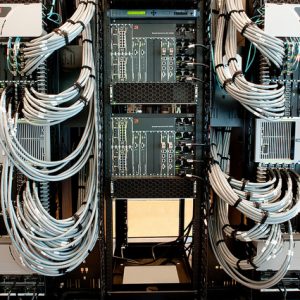

Flickr – Cisco Pics

What is a data centre?

A data centre is a dedicated facility that houses computer systems, data storage and associated telecommunications equipment as part of the information and communications technology (ICT) infrastructure. This ICT infrastructure is typically located within one or more data halls (sometimes called computer rooms); it is powered by electrical systems and cooled by mechanical systems. The Mechanical & Electrical (M&E) equipment is housed in rooms adjacent or close to the computer room(s).

If you entered a large data centre, you would typically encounter the following main elements:


- Security throughout the facility, with particular focus on the personnel and vehicle entrances

- Reception area – to check the credentials and belongings of staff and visitors

- ICT Equipment Room(s) – typically called data halls or computer rooms

- Telecommunications Room(s) – where telecoms carriers’ cables enter the building

- Electrical Rooms

- Mechanical Rooms (containing the air conditioning equipment)

- Battery Room(s) (in case of power failure)

- Generators (in case of power failure)

- Fire detection and suppression systems

- Operations Control Room

Flickr – Brocade Photos

Data Centre Considerations

Transaction Processing & Storage of Data – The main function of a data centre is to support the day-to-day computing needs of a business’s departments, including the transaction processing involved in dealing with customers. The scale of operation ranges from supporting a small retail operation, through a major global bank, to storing the messages, pictures and videos of a social media company.

Resilience – Depending on how critical the operations run within a data centre are, it is common to find duplicates of all types of computing, storage and networking equipment. This includes dual entry points into the building for one or more telecoms carriers. The degree of power and cooling resilience also varies: the more resilience, the more likely the data centre is to run effectively and provide customers with uninterrupted service throughout the year.

Security – There are two aspects of security, software and physical:

- Software: many different software methods are used to reduce or prevent fraudulent access to sensitive and confidential information. These help to combat threats such as fraud, software viruses, hacking and phishing.

- Physical: this addresses the potential for unauthorised access to the premises. Many methods are used, including effective site design, sufficient lighting, perimeter fencing, secure doors and windows, and strategically placed bollards. In addition, a security lodge at the site entrance, staffed by trained guards, controls specialised gates for personnel and vehicles, while electronic access control, CCTV, motion detection and intercom systems further strengthen security.

As a rule, the more critical and extensive the software and hardware become, the more time and effort are required to provide optimal protection.

Fire Detection and Suppression – Because every data centre contains IT equipment and electrical and cooling systems, a fire could potentially break out in any area. Several methods are used to detect smoke and, ultimately, fire. Automated alerts and alarms can trigger the appropriate fire suppression system to contain and isolate the damage and allow operations to resume as soon as possible.

Equipment Maintenance – Major upgrades or routine maintenance of key equipment housed within the data centre can occasionally put customer services at risk of interruption. During these activities the built-in resilience may be reduced, so every effort is made to schedule the work when it will have the least impact on customers, typically overnight.

Technology Advances – As demand for computing power and storage grows year on year, the amount of IT equipment needed to meet it grows too. However, because servers and storage are becoming both faster and physically smaller, technological advances go some way towards meeting this demand within existing data centre buildings. Where there is not enough space, power or cooling, new facilities must be built.

Disaster Recovery – To protect the integrity of user data as it is transacted, processed and stored, it is usual for it to be supported from two data centres. Users have access to both facilities, and their data is stored in both locations. A catastrophic failure at one of these data centres should not affect service, because users are redirected to the alternate site, wherever possible without the customer noticing or being affected.
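The redirection described above can be pictured as a choice between two sites based on whether each one is healthy. The sketch below is a minimal illustration under stated assumptions: the `Site` class, the site names and the boolean health flag are hypothetical, and for simplicity one site is treated as the preferred one. In practice, redirection is usually handled by DNS, load balancers or application-level replication rather than by a single function like this.

```python
from dataclasses import dataclass


@dataclass
class Site:
    # Hypothetical model of one data centre in a two-site pair.
    name: str
    healthy: bool


def choose_site(preferred: Site, alternate: Site) -> str:
    """Return the name of the site a user should be routed to.

    Assumption: users go to the preferred site while it is healthy and
    are redirected to the alternate site only if it has failed.
    """
    if preferred.healthy:
        return preferred.name
    if alternate.healthy:
        return alternate.name
    # Both sites down: nothing left to redirect to.
    raise RuntimeError("no data centre available")


# Example: the preferred site has failed, so the user is sent to DC-B.
print(choose_site(Site("DC-A", healthy=False), Site("DC-B", healthy=True)))
```

Because the user only ever sees the name returned, the failover is invisible to them as long as the alternate site holds the same data.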

Ownership – Depending on a company’s size and internal policies, there are several ways to support its computing needs.

- Self owned – A company builds and runs its own data centre(s). These can be located in the same building as the business personnel or remotely, in a stand-alone building dedicated to the operation of the data centre.

- Colocation – A growing trend, particularly among smaller businesses, is to hand over the operation of the data centre to a third-party company, with clients owning and operating only their IT equipment. These colocation companies subdivide the internal space of the data centre into usable areas that accommodate the specific requirements of each client.

- Cloud – In this option, a third party operates not only the facility but all of the IT equipment as well. This allows a company to concentrate on its core business without having to buy or operate servers, storage or networking equipment. The client uses applications running on the provider’s IT equipment, delivered as a ‘service’ and ‘in the cloud’.

What is the structure/design of a data centre?

Data Hall(s) – At the heart of the data centre are one or more data halls that house the computers, data storage and networking equipment. Most of this equipment is compact and installed in purpose-built cabinets; however, some equipment is large enough to stand on its own. Collectively, all of this equipment releases a great deal of heat into the data hall, which must be removed to keep the temperature within the data centre’s design specifications.

Traditionally, this equipment is organised into rows of cabinets standing on a raised floor. The floor is made up of removable 600mm x 600mm tiles, each supported by pedestals at its corners; depending on the design and size of the room, the under-floor space is typically 250mm to 1000mm deep. This arrangement serves two purposes: 1) air handling units blow cold air into the under-floor space, where it spreads across the whole floor area and is directed up through floor grilles that form part of the raised floor. The grilles are placed at specific points to cool the IT equipment in the room as efficiently as possible. 2) it provides a space for running power and data cabling. The power cables supply electricity to the IT equipment and the data cables interconnect the equipment as required.

This is only one of many possible designs. Designs vary for a number of reasons; one option is to omit the raised floor and place the equipment directly on the floor ‘slab’. Overhead cabling is also sometimes preferred, routed via overhead cable trays to the relevant equipment.

In the data hall, you will also find services that provide lighting, smoke/fire detection and fire suppression, humidity control, access control and CCTV.

Outside of the data hall, there are systems that provide power and cooling to the data halls.

Electrical Infrastructure – Electric cables from the public power utility’s substation enter the data centre at very high voltage. The power then passes through a series of transformers, automatic and manual switches and distribution units before arriving at each IT equipment cabinet at the correct voltage and with enough capacity to support all the equipment in that cabinet.

The electrical configuration also includes an Uninterruptible Power Supply (UPS). This consists of a bank of batteries and automatic switches; if the utility power fails, the batteries take over the load immediately and seamlessly. The batteries can only support the load for a few minutes, but during this time one or more diesel generators start automatically and, once fully running, take over the load until utility power returns and is stable once again.
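The handover from batteries to generators only works if the generators are fully running before the batteries are exhausted. The sketch below models which source carries the load at a given moment after a utility failure. It is a simplified, hypothetical model: the function name is invented for illustration, and the figures in the example (five minutes of battery autonomy, 30 seconds for the generators to be fully running, a one-hour outage) are assumptions, not values from any particular site.

```python
def power_source(t_seconds: float, outage_duration: float,
                 battery_autonomy: float, generator_start: float) -> str:
    """Return which source carries the load t_seconds after a utility failure.

    Hypothetical model (all times in seconds from the moment of failure):
    - the UPS batteries pick up the load immediately at t = 0;
    - the generators take over once they are fully running (generator_start);
    - the load returns to the utility once the outage ends.
    """
    if t_seconds >= outage_duration:
        return "utility"
    if t_seconds >= generator_start:
        return "generator"
    if t_seconds < battery_autonomy:
        return "ups_battery"
    # Batteries exhausted before the generators were ready: the load is dropped.
    return "none"


# Example timeline with assumed figures: 300 s of battery, 30 s generator start.
for t in (10, 60, 4000):
    print(t, power_source(t, outage_duration=3600,
                          battery_autonomy=300, generator_start=30))
```

The "none" case is the failure the design is meant to prevent: it only occurs if the generators take longer to start than the batteries can last.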

Mechanical Infrastructure – The cooling system consists of a number of different elements, including external cooling towers or heat exchangers, chillers and air handling units. These are all interconnected by valves and pipework that deliver cold water where it is needed and carry away the warmed water after it has been used to cool the data hall(s) and other necessary rooms.

Both the mechanical (cooling) and electrical systems can include spare components that are not needed during normal operation but take over if an equivalent component develops a fault and has to be shut down. With this built-in redundancy, the fault has no effect on the operation of the IT equipment and, therefore, no impact on customer service. Many degrees of redundancy and resilience can be built into the M&E design, determined by the criticality of the business operations and processes.
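A common way to reason about this kind of spare capacity is to ask whether the remaining units can still carry the load after any single unit fails; an arrangement with one unit more than the load requires is often described as ‘N+1’. The sketch below assumes identical units of equal capacity, and the 400 kW load and 150 kW unit size in the example are made-up figures for illustration only.

```python
import math


def units_required(load_kw: float, unit_capacity_kw: float) -> int:
    """Smallest number of identical units (N) that can carry the load."""
    return math.ceil(load_kw / unit_capacity_kw)


def survives_single_failure(load_kw: float, unit_capacity_kw: float,
                            installed_units: int) -> bool:
    """True if the load is still met after any one unit is shut down.

    Assumes all units are identical, so losing any one of them removes
    the same amount of capacity.
    """
    return (installed_units - 1) * unit_capacity_kw >= load_kw


# Example with assumed figures: a 400 kW load served by 150 kW units.
n = units_required(400, 150)                    # 3 units carry the load
print(n, survives_single_failure(400, 150, n))  # no spare: a fault drops load
print(survives_single_failure(400, 150, n + 1)) # one spare unit (N+1)
```

Higher-criticality designs extend the same check to more simultaneous failures or to fully duplicated systems.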

Free Cooling – Running the air conditioning system consumes considerable power, but this can be reduced substantially by designing the system to take advantage of ‘free cooling’. Specialist equipment allows the power-hungry chillers to be powered down automatically whenever the outside air is below a predetermined temperature, while the system continues to operate as normal and cool the required areas.
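The automatic switching described above amounts to a control rule based on the outside air temperature. The sketch below is hypothetical: the function name, the 12 °C changeover temperature and the 2 °C hysteresis band are assumptions chosen for illustration. The band is an added design choice that stops the chillers cycling on and off when the temperature hovers around the set point. Real systems often blend free cooling and mechanical cooling in stages; this only shows the simple on/off rule.

```python
def chillers_needed(outside_temp_c: float, chillers_running: bool,
                    changeover_c: float = 12.0, band_c: float = 2.0) -> bool:
    """Decide whether the chillers should run.

    Assumed rule: below changeover_c the chillers are switched off and
    free cooling carries the load; they restart only once the outside air
    rises above changeover_c + band_c. Within the band, the current state
    is kept (hysteresis).
    """
    if outside_temp_c < changeover_c:
        return False
    if outside_temp_c > changeover_c + band_c:
        return True
    # Inside the hysteresis band: keep doing whatever the chillers are doing.
    return chillers_running


# Example: a cold night switches the chillers off; 13 °C keeps the current state.
print(chillers_needed(5.0, chillers_running=True))
print(chillers_needed(13.0, chillers_running=False))
```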

Why do data centres need to be kept cool?

Data centres need to be kept cool because, according to Cisco, ‘heat accounts for 55% of electronic failure’. Servers, data storage and networking equipment run twenty-four hours a day, every day of the year, generating extraordinary amounts of heat. Cold air is drawn into the equipment (normally at the front) and passes over the electronic components, keeping them from overheating, before being exhausted from the back or side of the equipment at a considerably higher temperature. For example, air entering the equipment at 20°C might be exhausted at 40°C. The hot exhaust air rises and finds its way to the air conditioning units located around the data hall, where it is cooled and recirculated through the floor grilles into the room at the correct temperature, ready to re-enter the equipment. To protect against failure and slow processing, the equipment must be kept at a constant temperature every minute of every day. Many cooling solutions are available on the market to suit a variety of data centres and technical rooms.
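The temperature rise in the example above (air entering at 20 °C and leaving at 40 °C) also determines how much air the equipment needs. Using the standard sensible-heat relation Q = ρ · V · cp · ΔT, the sketch below estimates the airflow required to remove a given heat load. The 10 kW cabinet load is an assumption for illustration, and the air properties (density about 1.2 kg/m³, specific heat about 1005 J/(kg·K)) are typical values near room temperature.

```python
AIR_DENSITY = 1.2           # kg/m^3, approximate value near 20 °C
AIR_SPECIFIC_HEAT = 1005.0  # J/(kg*K), approximate value for dry air


def required_airflow_m3_per_s(heat_load_w: float, inlet_c: float,
                              outlet_c: float) -> float:
    """Volumetric airflow (m^3/s) needed to carry away heat_load_w watts.

    Rearranges Q = rho * V * cp * dT for V, assuming all of the
    equipment's heat is removed by the air passing through it.
    """
    delta_t = outlet_c - inlet_c
    if delta_t <= 0:
        raise ValueError("outlet air must be warmer than inlet air")
    return heat_load_w / (AIR_DENSITY * AIR_SPECIFIC_HEAT * delta_t)


# Example: an assumed 10 kW cabinet with air going from 20 °C to 40 °C.
flow = required_airflow_m3_per_s(10_000, 20.0, 40.0)
print(f"{flow:.2f} m^3/s")  # roughly 0.41 m^3/s, i.e. about 415 litres per second
```

Because the required airflow scales inversely with the temperature rise, halving ΔT to 10 °C would double the airflow needed for the same load.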

Flickr – Shawn Parker – tehgipster

An essential element in maintaining and protecting a data centre is regular cleaning of all technical spaces. Data centre cleaning is now standard practice for any room containing electrical equipment, and it is vital in reducing the risk of critical equipment failure due to dust contamination. Because cooling air moves continuously through data halls and other electrical rooms, any dust or debris quickly finds its way to the equipment’s air inlets, clogging them along with the fans that pull air through the kit. If too little cold air passes through the equipment, it overheats, which reduces its processing performance or, in the worst case, causes it to fail completely.

How often cleaning is needed depends on the size of the technical area and the amount of contamination it is exposed to; contamination is introduced every time someone enters the room.

During final commissioning of a new-build data centre, it is essential for a thorough builder’s clean to be undertaken as part of the development contract. However, this falls far short of the level of cleanliness required before IT and electrical equipment can be powered on. A deep clean is therefore necessary, carried out to the level of cleanliness appropriate for the facility in question.

Because of the amount of human traffic entering the rooms every day to maintain or install equipment, it is impossible to maintain the desired level of cleanliness on its own; it is therefore important to carry out specialist cleaning on a regular basis (every 6 or 12 months). The owner of the data centre decides the level of cleaning required.


This extensively updated version was written and edited by Roy Culligan, a global expert in critical facilities.


Tell us how big your data centre, server room or comms room is, and how often you want it cleaned, and we’ll be able to provide you with a quote. Email us, use the request form or call 0800 013 2182.
